Essay: AI, Misinformation, and Trust in the Digital Era

In today’s media landscape, artificial intelligence is a double-edged sword. AI tools enable efficient information delivery and innovative storytelling, but they also speed up the creation and spread of misinformation. Deepfakes, algorithmically amplified content, and AI-generated text challenge traditional notions of trust, and professionals must adapt quickly to maintain credibility in their work.

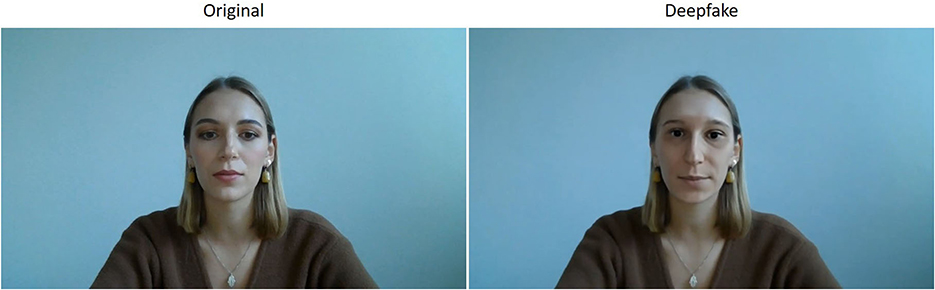

Consider deepfakes: realistic, AI-generated videos that can manipulate appearances, voices, and context. These tools are no longer confined to high-tech labs; they are accessible to anyone with a laptop and basic training. According to research from CEPR/VoxEU, AI-generated misinformation alters trust patterns and influences market demand for credible news. Professionals cannot rely on intuition alone; they must build verification processes into their workflows.

Similarly, AI hallucinations—where large language models generate confident yet false statements—pose operational and reputational risks. IBM’s research explains that understanding hallucinations allows teams to design verification layers and set realistic expectations for outputs. SeniorExecutive emphasizes that these risks extend to board-level concerns, making governance and ethical oversight essential.

To translate these ideas into practice, this guide blends clear orientation strategies with professional-level verification techniques.

Ultimately, combating misinformation is not solely a technical problem. It is a human problem: people read, share, and respond to content. Recognizing biases, implementing verification protocols, and educating teams can mitigate harm. For professionals in digital media and technology, mastering these skills is critical, not only for ethical responsibility but also for sustaining trust, reputation, and influence in their fields.

MLA References:

- CEPR/VoxEU. “AI Misinformation and the Value of Trusted News.” 2025.

- IBM. “What Are AI Hallucinations?” IBM Think, 2025.

- UPenn Milton Wolf Seminar. “Media & Diplomacy.” 2025.

- SeniorExecutive. “AI Model Hallucinations: Risks.” 2025.

- Skywork. “I Tested 12 AI Humanizers — Bypassing Detection.” 2025.

- Here is a link to my Zotero library, with additional sources you can explore yourself.

Guide: Quick Orientation & Practical Steps

Recognize

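Look for mismatched shadows in images, unnatural phrasing in text, and sources whose credibility cannot be clearly established. Misinformation is often framed to feel urgent or emotionally charged, so treat that tone as a cue to slow down and check.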

Verify

- Check the original publisher.

- Use reverse-image search tools.

- Compare claims across reputable outlets.

Respond

Professionals should document verification steps and present corrections clearly and calmly. Transparency builds trust.
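One way to document verification steps is a small structured log kept alongside each claim. The sketch below is a minimal illustration, not a prescribed workflow: the class name `VerificationRecord`, the check labels in `REQUIRED_CHECKS` (which mirror the three items under Verify), and the verdict wording are all hypothetical choices made for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical labels for the three checks listed under "Verify".
REQUIRED_CHECKS = (
    "original_publisher",
    "reverse_image_search",
    "cross_outlet_comparison",
)


@dataclass
class VerificationRecord:
    """A log of the checks run against one claim (illustrative only)."""

    claim: str
    checks: dict = field(default_factory=dict)
    started_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def record(self, check_name, passed, note=""):
        # Store the outcome and a free-text note so the step can be audited later.
        self.checks[check_name] = {"passed": passed, "note": note}

    def outstanding(self, required=REQUIRED_CHECKS):
        # Checks that have not been run yet.
        return [name for name in required if name not in self.checks]

    def verdict(self, required=REQUIRED_CHECKS):
        # "incomplete" until every required check has been recorded.
        if self.outstanding(required):
            return "incomplete"
        if all(self.checks[name]["passed"] for name in required):
            return "verified"
        return "disputed"
```

A record like this can be attached to a correction note, so readers and colleagues can see which checks were run and what each one found.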

Interactive Network: How Misinformation Spreads

Click Simulate Spread to visualize how false information can ripple through interconnected networks.
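For readers who want to see the idea without the interactive widget, the ripple effect can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the code behind the simulation on this page: the network `TOY_NETWORK`, the account names, and the single `share_probability` applied to every connection are hypothetical.

```python
import random
from collections import deque

# Hypothetical toy network: each account maps to the accounts that see its posts.
TOY_NETWORK = {
    "origin": ["ana", "ben"],
    "ana": ["cho", "dev"],
    "ben": ["dev", "eli"],
    "dev": ["fay"],
}


def simulate_spread(network, seed_account, share_probability, rng=None):
    """Return accounts in the order the post reaches them.

    Each connection gets one chance to pass the post along, with the
    same probability for every connection (a deliberate simplification).
    """
    rng = rng or random.Random()
    reached = [seed_account]
    seen = {seed_account}
    queue = deque([seed_account])
    while queue:
        account = queue.popleft()
        for follower in network.get(account, []):
            # Skip accounts already reached; otherwise roll for a share.
            if follower not in seen and rng.random() < share_probability:
                seen.add(follower)
                reached.append(follower)
                queue.append(follower)
    return reached


if __name__ == "__main__":
    # A fixed seed makes the run repeatable; results change with the seed.
    print(simulate_spread(TOY_NETWORK, "origin", 0.6, random.Random(1)))
```

Raising `share_probability` toward 1.0 makes the post reach every connected account, while lowering it toward 0.0 stops it at the source. That is the intuition behind slowing down before resharing.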

Misinformation Lifecycle

This diagram illustrates the typical journey of a misinformation post.

Quiz: Spot the AI-Generated Text

Which of the following is most likely AI-generated?

Quiz: Verify the Source

Which headline seems the most trustworthy?

Compare: Original vs Altered
